Computational Projects

Download my CV as PDF by clicking here.

Multi-Modal Deep Generative Audio Source Separation

Download the source code

Download MSc thesis

My MSc thesis, supervised by Dr Brooks Paige, investigates deep generative models for audio source separation and proposes a modified version of Bayesian Annealed SIgnal Source (BASIS) separation. The method uses variational autoencoders as deep generative priors within noise-annealed Langevin dynamics to sample from the posterior over the sources given a mixed signal. I also propose a multi-modal extension that incorporates visual information into Modified BASIS, but it fails to improve performance. Experiments on pop and chamber music show promising results, but suggest a sensitivity to in-class variability.

Monte Carlo Tree Search for Carcassonne

Download the source code

Download report

I implemented multiple variants of Monte Carlo Tree Search (MCTS) for the board game Carcassonne. The implementation significantly outperforms a human player. This project was realised as part of my Bachelor's thesis, which was supervised by Dr Malte Helmert and Dr Silvan Sievers.
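As an illustration of the selection step used by the common UCT variant of MCTS, here is a minimal sketch. It is not taken from the project's code: the function names, the `(value_sum, visits)` child representation, and the exploration constant `c = sqrt(2)` are all assumptions made for the example.

```python
import math


def uct_score(child_value_sum, child_visits, parent_visits, c=math.sqrt(2)):
    """UCB1 score of a child node, as used by UCT (hypothetical helper)."""
    if child_visits == 0:
        return math.inf  # unvisited children are always tried first
    exploit = child_value_sum / child_visits  # average reward so far
    explore = c * math.sqrt(math.log(parent_visits) / child_visits)
    return exploit + explore


def select_child(children, parent_visits):
    """Return the index of the child with the highest UCT score.

    children: list of (value_sum, visits) tuples (an assumed layout).
    """
    scores = [uct_score(v, n, parent_visits) for v, n in children]
    return max(range(len(children)), key=scores.__getitem__)
```

The exploration term shrinks as a child is visited more often, so the search gradually shifts from trying every move to refining the most promising ones.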

Predicting SDG indicators using Deep Learning

Download report + code

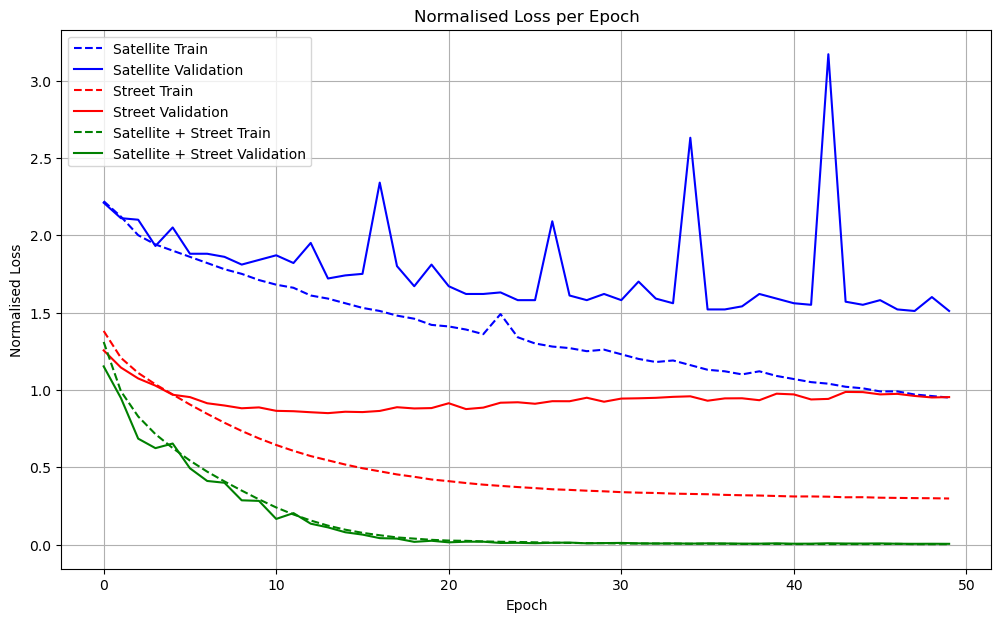

I trained a deep learning model to predict socio-economic data given street-level and satellite images. This was done in an effort to provide an inexpensive method of predicting the indicators of certain Sustainable Development Goals (SDG), a set of 17 goals proposed by the United Nations in 2015. I used the DHS dataset introduced as part of SustainBench, a suite of sustainability benchmarks targeting the SDGs. The dataset consists of geographical data points, each containing data on poverty, child mortality, women's educational attainment, women's BMI, water quality and access to sanitation, as well as one satellite and multiple street-level images of each location. I managed to outperform the benchmark prediction by a significant margin by training a model on a combination of street-level and satellite images. This project was realised as part of the seminar AI for Sustainable Development taught by Dr María Pérez-Ortiz.

Neuroevolution in Flappy Bird

Download the code

Evolution is responsible for intelligent life on Earth. We can model evolution computationally over generations and use it to evolve intelligent behaviour. I demonstrated this on the game Flappy Bird: I implemented a neural network library from scratch and used a genetic algorithm (i.e., simulated evolution) to find the parameters of the neural networks controlling the birds. This little program manages to evolve a near-perfect neural network for playing Flappy Bird in only a few generations.
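The loop behind a genetic algorithm of this kind can be sketched as follows. This is a generic illustration rather than the project's implementation: the genome is assumed to be a flat list of network weights, and `toy_fitness` is a hypothetical stand-in for the score a bird would earn by playing the game. All parameter names and default values are assumptions.

```python
import random


def evolve(fitness, genome_len, pop_size=50, generations=30,
           mutation_rate=0.1, mutation_scale=0.5, elite_frac=0.2, seed=0):
    """Evolve a list of floats that maximises `fitness` (illustrative sketch)."""
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(genome_len)]
                  for _ in range(pop_size)]
    n_elite = max(2, int(elite_frac * pop_size))
    for _ in range(generations):
        # Selection: keep the fittest genomes unchanged (elitism).
        population.sort(key=fitness, reverse=True)
        elite = population[:n_elite]
        children = []
        while len(elite) + len(children) < pop_size:
            mum, dad = rng.sample(elite, 2)
            # Uniform crossover: each gene comes from one of the two parents.
            child = [rng.choice(pair) for pair in zip(mum, dad)]
            # Mutation: occasionally perturb a gene with Gaussian noise.
            child = [g + rng.gauss(0, mutation_scale)
                     if rng.random() < mutation_rate else g
                     for g in child]
            children.append(child)
        population = elite + children
    return max(population, key=fitness)


# Hypothetical stand-in for "how well does this network play?".
target = [0.5, -0.3, 0.8]


def toy_fitness(genome):
    return -sum((g - t) ** 2 for g, t in zip(genome, target))


best = evolve(toy_fitness, genome_len=len(target))
```

Because the elite genomes are carried over unchanged, the best fitness in the population can never decrease from one generation to the next.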

Interacting Particles

Download the code

Interacting particles can be used to model various natural phenomena, such as Physarum slime mold finding a path, as described by Jones (2010). I've been playing around with this idea, adapting the principles of Physarum slime mold, but tweaking knobs in order to generate striking visuals.

Multi-Task Multi-Agent Reinforcement Learning Using Shared Distilled Policies

Download the source code

Download report

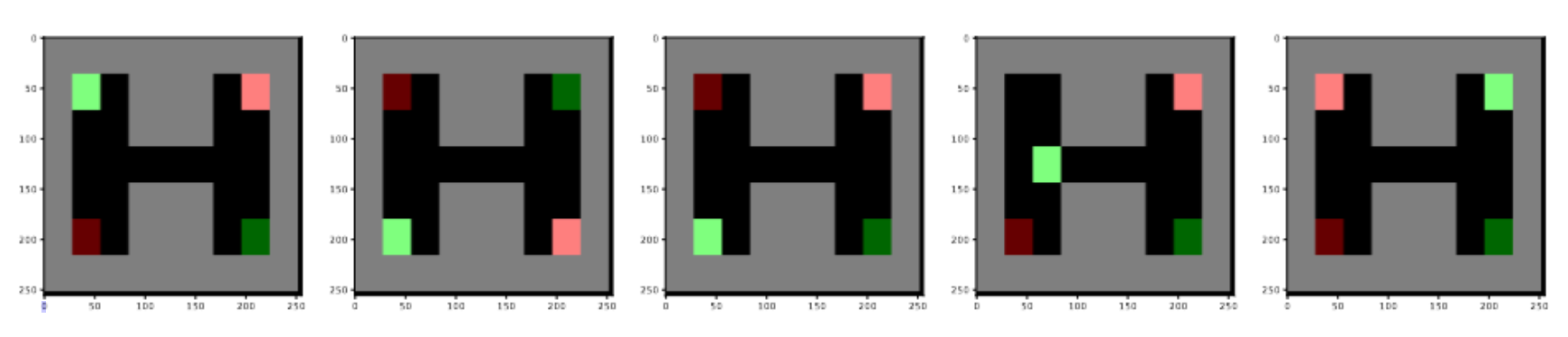

In reinforcement learning (RL), an agent's policy for one task is likely to resemble its policies for other, similar tasks. This idea has been applied to single-agent RL to create the Distral framework: an agent learns a policy for each task while being constrained to stay close to a shared policy. Based on this idea, we propose MultiDistral, an extension of Distral to the multi-agent setting. We show that MultiDistral outperforms a Q-Learning baseline when only a few games have been played.

Investigating Contrastive Pre-Training for Pixel-Wise Segmentation

Download the source code

Download report

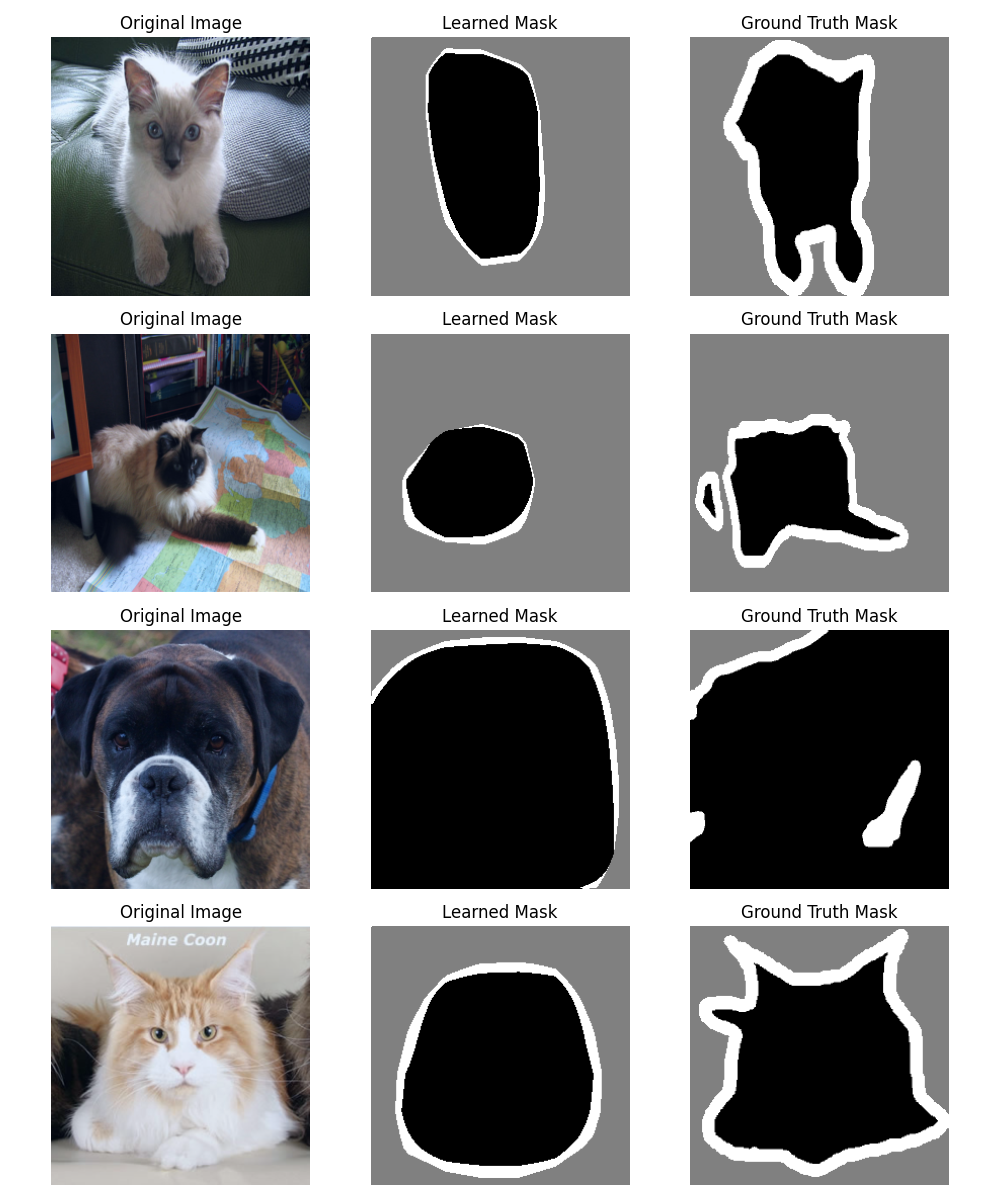

This project investigates whether contrastive (i.e., self-supervised) pre-training can leverage large quantities of unlabeled data to improve segmentation performance. We use the SimCLR framework for pre-training on the ImageNet dataset, thereby learning a disentangled latent space using simple transforms. This latent space is then used as the starting point for training a ResNet to perform segmentation on the Oxford-IIIT dataset. We observe a marginal increase in mask accuracy when pre-training is applied.
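To make the contrastive objective concrete, here is a minimal pure-Python sketch of the NT-Xent loss that SimCLR optimises. It is an illustration only, not the project's training code: the pairing convention (`z[2k]` and `z[2k+1]` are two views of the same image), the function names, and the temperature of 0.5 are assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent(z, temperature=0.5):
    """NT-Xent loss over 2N embeddings.

    Assumed layout: z[2k] and z[2k + 1] are embeddings of two augmented
    views of the same image; every other embedding acts as a negative.
    """
    n = len(z)
    sims = [[cosine(z[i], z[j]) / temperature for j in range(n)]
            for i in range(n)]
    total = 0.0
    for i in range(n):
        positive = i ^ 1  # partner index: 0<->1, 2<->3, ...
        log_denominator = math.log(
            sum(math.exp(sims[i][k]) for k in range(n) if k != i))
        total += log_denominator - sims[i][positive]
    return total / n
```

The loss is low when the two views of each image are close to each other and far from all other embeddings, which is what pushes the encoder towards augmentation-invariant features.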

Reaction Diffusion

Download the code

Reaction diffusion is an algorithm that simulates two substances with different properties diffusing and reacting in a system. There's a natural elegance to how this looks for certain parameters. It bears similarity to the patterns on the fur of certain animals, as well as to other phenomena in nature. Karl Sims wrote a great tutorial on Reaction Diffusion, to be found here.
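One update step can be sketched as below, following the Gray-Scott model as presented in Karl Sims's tutorial. That the project uses exactly this model, these parameter values, the 3x3 Laplacian weights, and wrap-around edges is an assumption; the function names are made up for the example.

```python
def laplacian(grid, x, y):
    """3x3 weighted Laplacian with wrap-around edges.

    Weights: centre -1, direct neighbours 0.2, diagonals 0.05.
    """
    n, m = len(grid), len(grid[0])
    total = -grid[x][y]
    for dx in (-1, 0, 1):
        for dy in (-1, 0, 1):
            if dx == 0 and dy == 0:
                continue
            weight = 0.2 if dx == 0 or dy == 0 else 0.05
            total += weight * grid[(x + dx) % n][(y + dy) % m]
    return total


def step(A, B, d_a=1.0, d_b=0.5, feed=0.055, kill=0.062, dt=1.0):
    """Advance the concentrations of substances A and B by one time step."""
    n, m = len(A), len(A[0])
    new_A = [[0.0] * m for _ in range(n)]
    new_B = [[0.0] * m for _ in range(n)]
    for x in range(n):
        for y in range(m):
            a, b = A[x][y], B[x][y]
            reaction = a * b * b  # two B and one A are converted into three B
            new_A[x][y] = a + (d_a * laplacian(A, x, y)
                               - reaction + feed * (1 - a)) * dt
            new_B[x][y] = b + (d_b * laplacian(B, x, y)
                               + reaction - (kill + feed) * b) * dt
    return new_A, new_B
```

A typical run starts with A set to 1 everywhere, seeds a small patch of B, and calls `step` repeatedly; different `feed` and `kill` values produce different patterns.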

Markov chain Monte Carlo (MCMC) for text decryption

This project was implemented as part of the Probabilistic and Unsupervised Learning course taught by the Gatsby Computational Neuroscience Unit at UCL. Markov chain Monte Carlo (MCMC) methods are powerful tools in statistics, allowing us to approximate probability distributions that we cannot compute directly. One such distribution is the distribution over mappings from the symbols of a ciphertext to the symbols of its decrypted form. If we can find a highly probable mapping, then we can decrypt the ciphertext. The Metropolis-Hastings algorithm, an MCMC method that constructs a Markov chain over candidate mappings, lets us approximate the mapping from encrypted to decrypted text, thus effectively decrypting a ciphertext. The only prior knowledge required is the probability of any one letter occurring in any text, which can easily be approximated by counting letters in a dictionary.
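A minimal sketch of Metropolis-Hastings over substitution keys is given below. It is not the coursework solution: the function names, the swap proposal, the identity starting key, and the single-letter scoring function are assumptions chosen to mirror the description above. A richer language model could be swapped in by replacing `log_likelihood`.

```python
import math
import random
import string

ALPHABET = string.ascii_lowercase


def decode(text, key):
    """Apply a substitution key (cipher letter -> plain letter)."""
    return "".join(key.get(ch, ch) for ch in text)


def log_likelihood(text, log_probs):
    """Score a text by summing the log-probabilities of its letters."""
    return sum(log_probs[ch] for ch in text if ch in log_probs)


def metropolis_hastings(ciphertext, log_probs, steps=2000, seed=0):
    """Search for a likely key; returns (best_key, best_score)."""
    rng = random.Random(seed)
    key = dict(zip(ALPHABET, ALPHABET))  # assumed start: identity mapping
    score = log_likelihood(decode(ciphertext, key), log_probs)
    best_key, best_score = dict(key), score
    for _ in range(steps):
        # Proposal: swap the plain letters assigned to two cipher letters.
        a, b = rng.sample(ALPHABET, 2)
        proposal = dict(key)
        proposal[a], proposal[b] = key[b], key[a]
        new_score = log_likelihood(decode(ciphertext, proposal), log_probs)
        # The proposal is symmetric, so the acceptance probability is
        # min(1, likelihood ratio).
        if new_score >= score or rng.random() < math.exp(new_score - score):
            key, score = proposal, new_score
            if score > best_score:
                best_key, best_score = dict(key), score
    return best_key, best_score
```

Accepting some worse keys with small probability is what lets the chain escape local optima that a purely greedy search would get stuck in.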

Counterfactual Regret Minimisation for Kuhn Poker

Download source code

Download report

Games act as a benchmark for artificial intelligence. They offer intuitive battlegrounds to test intelligence, the same way humans use games to challenge and test themselves, be it the Olympic Games or Chess. There was a huge uproar when an AI called Deep Blue beat Chess grandmaster Garry Kasparov in 1997. The reason for this was simple: being good at Chess was seen as one of the definitive measures of intelligence. Ergo, if an AI can beat the world's best Chess player, then AI has surpassed us. This logic doesn't quite hold up, as computers are distinctly good at Chess because Chess is a so-called perfect-information game. Both players have access to the same information, so a computer can make use of its computational advantage and simulate many more possible outcomes than its opponent.

Poker, on the other hand, is a so-called imperfect-information game, as each player possesses information that isn't shared with the other players. This makes the brute-force strategy of simulating all possible futures infeasible, as the simulated outcomes hinge on information that isn't available. But there's a different approach, which comes from the field of game theory. The algorithm called Counterfactual Regret Minimisation (CFR) can be used to approximate a so-called Nash equilibrium, which is a set of strategies, one per player, where no player can gain an advantage by unilaterally changing their own strategy. Here, a strategy is a probability distribution over all possible actions at each possible state. I implemented this on a simplified version of Poker called Kuhn Poker.
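For readers who want to see the idea in code, here is a compact sketch of vanilla CFR on Kuhn Poker. It is an independent illustration and not the project's implementation. It assumes the standard rules (cards 1 to 3, an ante of 1, a single bet of 1), labels information sets as the player's card followed by the action history, and uses made-up class and function names.

```python
import itertools

ACTIONS = "pb"  # p = pass/fold, b = bet/call


class Node:
    """Regret and strategy statistics for one information set."""

    def __init__(self):
        self.regret_sum = [0.0, 0.0]
        self.strategy_sum = [0.0, 0.0]
        self.current = [0.5, 0.5]

    def refresh(self):
        # Regret matching: play actions in proportion to positive regret.
        positive = [max(r, 0.0) for r in self.regret_sum]
        total = sum(positive)
        self.current = ([p / total for p in positive] if total > 0
                        else [0.5, 0.5])

    def average_strategy(self):
        total = sum(self.strategy_sum)
        return ([s / total for s in self.strategy_sum] if total > 0
                else [0.5, 0.5])


def terminal_payoff(cards, history, player):
    """Payoff for the player to act, or None if the hand is not over."""
    if len(history) < 2:
        return None
    higher = cards[player] > cards[1 - player]
    if history[-1] == "p":
        if history == "pp":
            return 1 if higher else -1  # showdown for the antes
        return 1  # the opponent folded to a bet
    if history[-2:] == "bb":
        return 2 if higher else -2  # showdown after a called bet
    return None


def cfr(cards, history, reach, nodes):
    """Return the expected utility for the player to act."""
    player = len(history) % 2
    payoff = terminal_payoff(cards, history, player)
    if payoff is not None:
        return payoff
    node = nodes.setdefault(f"{cards[player]}{history}", Node())
    strat = node.current
    utils = [0.0, 0.0]
    for a, action in enumerate(ACTIONS):
        next_reach = list(reach)
        next_reach[player] *= strat[a]
        # Zero-sum game: the opponent's utility is the negative of ours.
        utils[a] = -cfr(cards, history + action, next_reach, nodes)
    node_util = strat[0] * utils[0] + strat[1] * utils[1]
    for a in range(2):
        # Counterfactual regret is weighted by the opponent's reach.
        node.regret_sum[a] += reach[1 - player] * (utils[a] - node_util)
        node.strategy_sum[a] += reach[player] * strat[a]
    return node_util


def train(iterations):
    """Run CFR; returns (average utility for the first player, nodes)."""
    nodes = {}
    deals = list(itertools.permutations((1, 2, 3), 2))
    total = 0.0
    for _ in range(iterations):
        for node in nodes.values():
            node.refresh()  # strategies stay fixed within an iteration
        for cards in deals:
            total += cfr(cards, "", [1.0, 1.0], nodes)
    return total / (iterations * len(deals)), nodes


value, nodes = train(5000)
```

The first player's game value in Kuhn Poker is known to be -1/18, so `value` should approach roughly -0.056 as the number of iterations grows. The approximate equilibrium itself is read from `average_strategy()` of each node, not from the final `current` strategy.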